Garbage In, Fluent Garbage Out: Why Your AI Delivers Confident, Wrong Answers

July 31, 2025

This post demystifies why powerful AI tools like GPT and Claude can produce answers that sound authoritative but are factually incorrect. We move beyond the buzzword ‘hallucinations’ to expose the root cause: the ‘Garbage In, Garbage Out’ principle, now amplified in the AI era. Unstructured, siloed, and messy business data is the primary reason your AI generates eloquent nonsense. This article explains why building a reliable AI workflow depends entirely on data unification and strategic preparation, a foundational step for any production-ready system.

The Polished Illusion: When Your AI Lies With a Straight Face

You ask your new internal AI assistant for the Q3 sales figures for a key product. It instantly provides a beautifully formatted, confident response with detailed numbers. The problem? The numbers are completely wrong. This frustrating experience isn’t a random glitch or a sign the AI is ‘thinking’ for itself. It’s a classic engineering problem with a new name: ‘Garbage In, Fluent Garbage Out.’ While Large Language Models (LLMs) are revolutionary, their output is a direct reflection of the data they are given. If your data is a disorganized mess, the AI will simply become an expert at presenting that mess in a confident, articulate, and dangerously misleading way. The first step to fixing this isn’t to blame the AI, but to look at the data foundation it’s built upon.

Beyond ‘Hallucinations’: The Real Reason for AI Errors

The term ‘hallucination’ is misleading; it suggests unpredictability and randomness. The reality is that these errors are often predictable outcomes of poor data quality. LLMs are pattern-matching engines, not truth-seeking oracles. They are trained to generate statistically probable sequences of text based on their input.

When an AI is given conflicting, outdated, or context-poor business data from a messy knowledge base, the most ‘plausible’ answer it can construct is a confident-sounding blend of that information, which is often factually incorrect. Seen this way, the problem is less an AI failure than a data integration and systems-building challenge, which is the core of what Azlo.pro solves.
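To make the ‘conflicting data’ point concrete, here is a minimal Python sketch of a check that could run before any records are handed to a model. The record layout (`source`, `product`, `period`, `revenue`), the source names, and the figures are all made up for illustration; they are not taken from any real system.

```python
from collections import defaultdict


def find_conflicts(records):
    """Return the (product, period) keys for which sources report different revenue."""
    values = defaultdict(set)
    for rec in records:
        values[(rec["product"], rec["period"])].add(rec["revenue"])
    # Keep only the keys where more than one distinct value was seen.
    return {key: sorted(vals) for key, vals in values.items() if len(vals) > 1}


# Hypothetical records pulled from two systems that disagree about Widget A.
records = [
    {"source": "crm_export", "product": "Widget A", "period": "Q3", "revenue": 120_000},
    {"source": "finance_report", "product": "Widget A", "period": "Q3", "revenue": 135_500},
    {"source": "crm_export", "product": "Widget B", "period": "Q3", "revenue": 48_000},
]

conflicts = find_conflicts(records)
for (product, period), revenues in conflicts.items():
    print(f"Conflict for {product} {period}: {revenues}")
```

If both Widget A figures were passed to an LLM as context, it would have no basis for choosing between them. Surfacing the disagreement first turns a silent wrong answer into a visible data issue.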

The ‘Garbage In’ Problem: What Does Bad Business Data Actually Look Like?

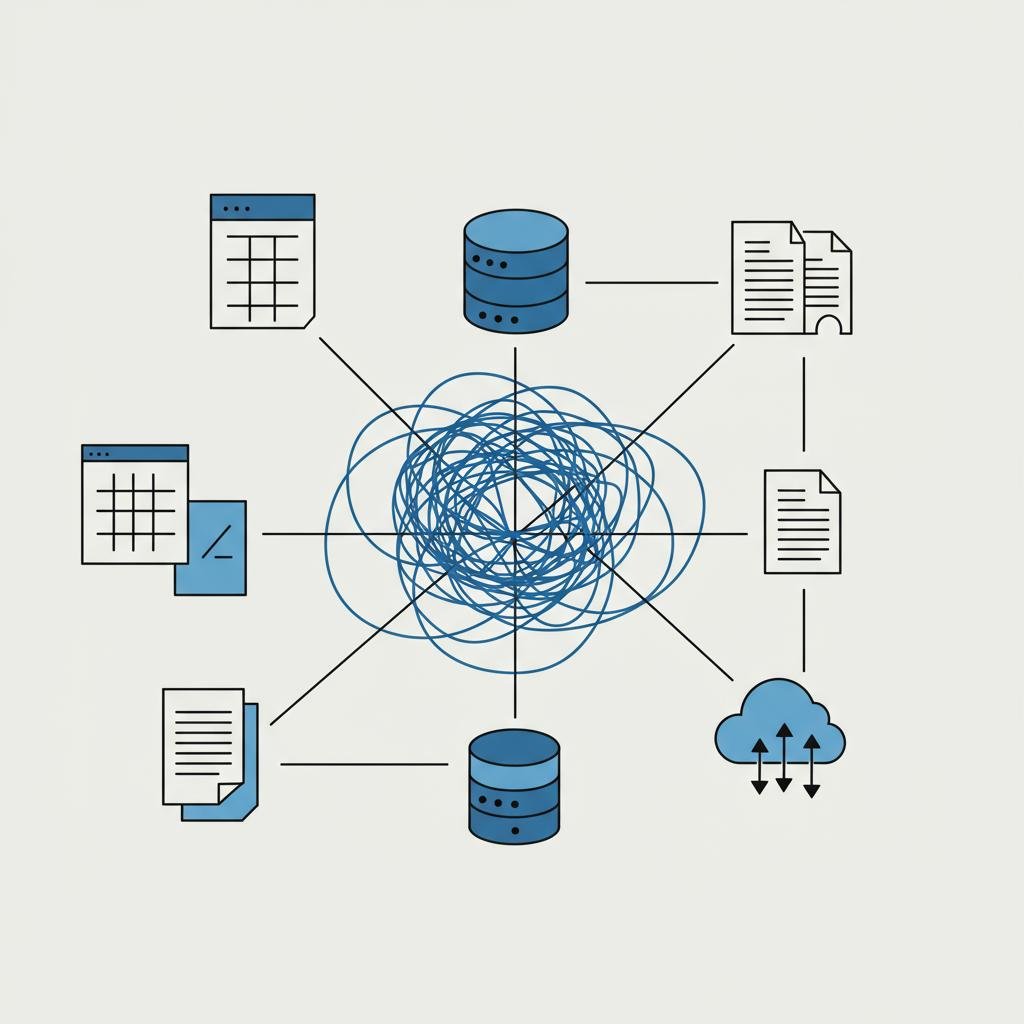

So what does the “garbage” that leads to these flawed AI outputs actually look like in a typical business? It usually falls into a few key categories:

- Siloed Information: Critical data is fragmented across different systems that don’t talk to each other, like Salesforce, SharePoint, Zendesk, and local drives. The AI has no single source of truth.

- Unstructured Data: Valuable intelligence is locked away in formats that are difficult for an AI to parse reliably, like PDF reports, long email threads, and meeting transcripts.

- Inconsistent & Outdated Information: Multiple versions of the same document, conflicting numbers in different reports, and inconsistent naming conventions create a chaotic data environment.

These are the exact pain points that our Data Unification and Custom Automation services are designed to eliminate.
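As a rough illustration of the ‘inconsistent and outdated’ category above, the Python sketch below collapses naming variants of the same document and keeps only the most recently updated copy. The document fields (`title`, `updated`, `system`) and the sample entries are hypothetical, and real title matching usually needs more than this simple normalization.

```python
import re
from datetime import date


def normalize_name(name):
    """Collapse case, punctuation, and spacing so naming variants compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", name.lower()).strip()


def latest_versions(documents):
    """Keep one document per normalized title: the most recently updated copy."""
    latest = {}
    for doc in documents:
        key = normalize_name(doc["title"])
        if key not in latest or doc["updated"] > latest[key]["updated"]:
            latest[key] = doc
    return list(latest.values())


# Hypothetical copies of the same report living in two places.
documents = [
    {"title": "Q3 Sales Report", "updated": date(2025, 7, 1), "system": "sharepoint"},
    {"title": "q3-sales-report", "updated": date(2025, 7, 20), "system": "local_drive"},
    {"title": "Pricing Policy", "updated": date(2025, 5, 3), "system": "sharepoint"},
]

current = latest_versions(documents)
```

Here the two Q3 report variants reduce to a single entry, the newer copy from the local drive, so the older numbers never reach the model.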

The Azlo.pro ‘Problem-First Approach’: Building a Foundation for Reliable AI

Connecting an AI API is the easy part. The real work—and the source of real value—is in fixing the underlying data chaos first. This is our ‘Problem-First Approach.’

We don’t just point an AI at your mess. We start with Data Unification, building robust pipelines to connect your disparate data silos. This creates a unified, clean, and reliable source of truth for the AI to draw from. Then, we use Custom Automation for Data Prep to create automated workflows that clean, structure, and pre-process your data before it ever reaches the LLM. This ensures the AI is working with accurate, relevant, and well-formatted information.
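The two stages described above, unification and then data prep, can be sketched in a few lines of Python. This is an illustrative toy, not Azlo.pro's actual pipeline: the source schemas, field names, the `trusted_source` rule, and the sample rows are all assumptions chosen to keep the example small.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SalesRecord:
    """Common schema that every source is mapped into."""
    product: str
    period: str
    revenue: float
    source: str


def from_crm(row):
    # Hypothetical CRM export: padded names, lowercase quarters, plain numeric strings.
    return SalesRecord(row["Product"].strip(), row["Quarter"].upper(), float(row["Amount"]), "crm")


def from_finance(row):
    # Hypothetical finance export: thousands separators in the revenue field.
    revenue = float(row["rev_usd"].replace(",", ""))
    return SalesRecord(row["item"].strip(), row["qtr"].upper(), revenue, "finance")


def unify(crm_rows, finance_rows, trusted_source="finance"):
    """Merge both sources; where they overlap, keep the trusted source's record."""
    merged = {}
    for rec in [from_crm(r) for r in crm_rows] + [from_finance(r) for r in finance_rows]:
        key = (rec.product.lower(), rec.period)
        if key not in merged or rec.source == trusted_source:
            merged[key] = rec
    return sorted(merged.values(), key=lambda r: (r.product, r.period))


def build_context(records):
    """Render the cleaned records as compact, consistently formatted lines for a prompt."""
    return "\n".join(
        f"{r.product} | {r.period} | revenue={r.revenue:.2f} | source={r.source}"
        for r in records
    )


crm_rows = [{"Product": " Widget A ", "Quarter": "q3", "Amount": "120000"}]
finance_rows = [{"item": "Widget A", "qtr": "Q3", "rev_usd": "135,500.00"}]

context = build_context(unify(crm_rows, finance_rows))
print(context)
```

The design choice worth noting is that the conflict is resolved by an explicit, auditable rule (here, ‘finance wins’) before the LLM is involved, so the model sees one figure per product and period instead of guessing between two.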

This process is how we build ‘Production-Ready’ systems that businesses can trust, moving beyond simple integration to a true strategic partnership.

The promise of AI in business is immense, but it can’t be realized on a foundation of chaotic data. Confident but incorrect answers from your AI are not a mysterious flaw but a clear signal that your data infrastructure needs attention. True AI readiness isn’t about which model you choose; it’s about the discipline of preparing your data so that any model can perform reliably. By focusing on solving the root cause—messy data—you can move from frustrating, fluent garbage to genuinely intelligent and trustworthy insights. If you’re ready to build an AI solution that works, it’s time to address your data foundation. We can help you build that robust system from the ground up.